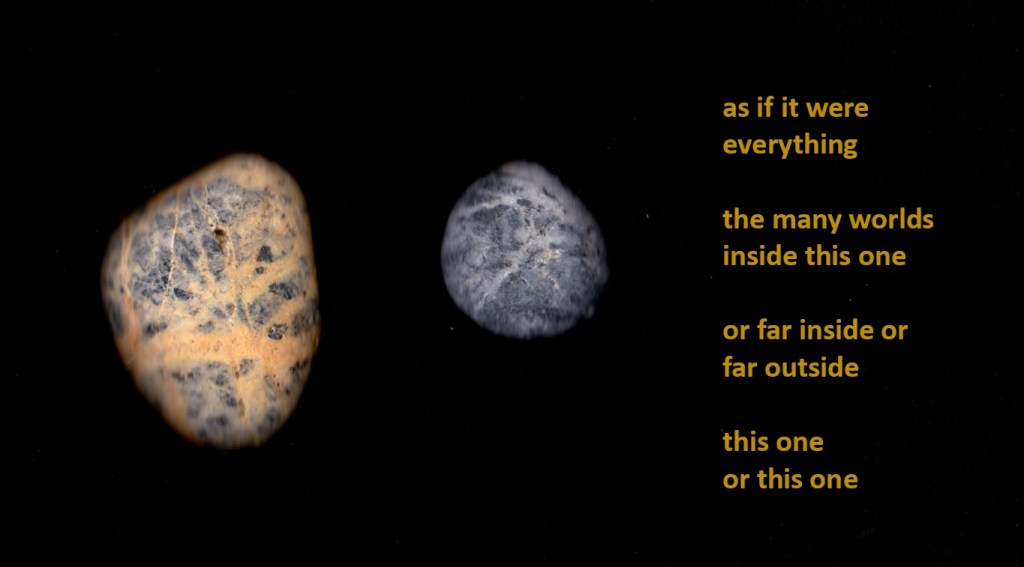

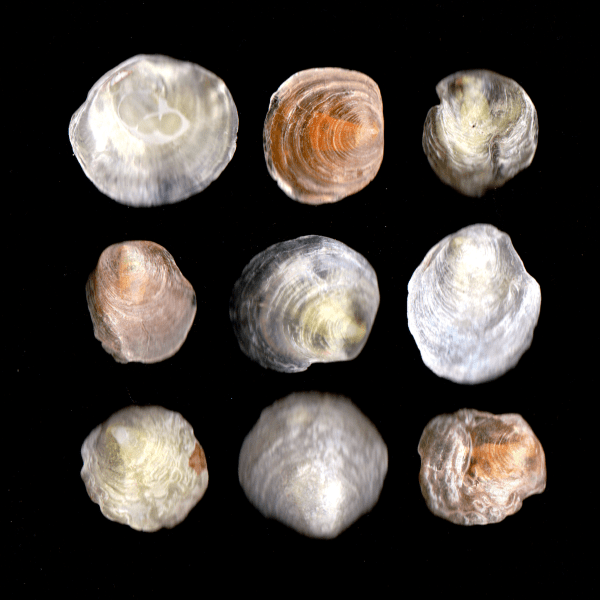

I believe that I now understand in some small measure why the Buddhist goes on pilgrimage to a mountain. The journey is itself part of the technique by which the god is sought. It is a journey into Being; for as I penetrate more deeply into the mountain’s life, I penetrate also into my own. For an hour I am beyond desire. It is not ecstasy, that leap out of the self that makes man like a god. I am not out of myself, but in myself. I am. To know Being, this is the final grace accorded from the mountain.

— Nan Shepard, *The Living Mountain*, p. 108, last paragraph